E is for Exploratory Data Analysis: Images

What is Exploratory Data Analysis (EDA), why is it done, and how do we do it in Python?

While my previous posts outlined methods for conducting EDA for numerical and categorical data, this post focuses on EDA for images.

What is Exploratory Data Analysis (EDA)?

Again, since all learning is repetition, EDA is a process by which we 'get to know' our data by computing basic descriptive statistics and creating visualizations.

Why is it done for images?

We need to know:

- how many images we have

- if they are labeled appropriately (assuming we're doing supervised learning)

- their format (i.e. size and color)

How do we do it in Python?

Step 1: Frame the Problem

"Is it possible to determine the minimum age a reader should be for a given book based solely on the cover?"

Step 2: Get the Data

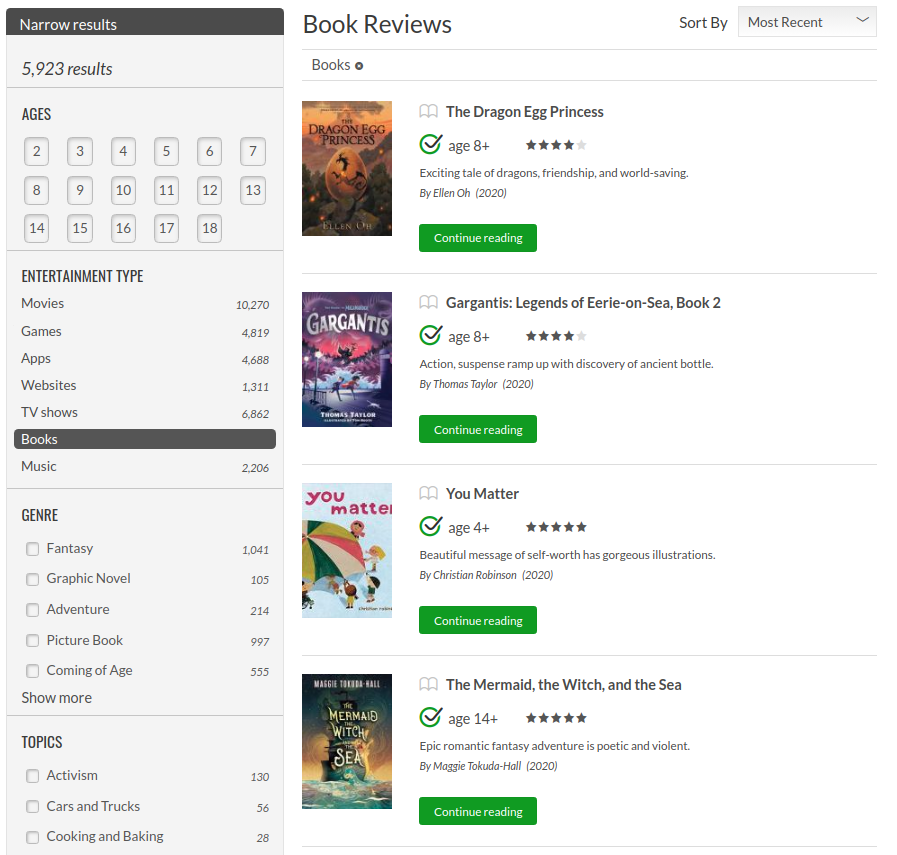

As mentioned in my previous posts, I sourced labeled training data from Common Sense Media's Book Reviews by scraping and saving the target pages using BeautifulSoup, and then extracted and saved the book covers into a separate folder.

In the end, I was able to use over 5000 covers for training and testing purposes, but today we'll work with a subsample of the covers which can be downloaded from here.

Step 3: Explore the Data to Gain Insights (i.e. EDA)

As always, import the essential libraries, then load the data.

import pandas as pd

import numpy as np

import os

import cv2

import ipyplot

IMAGES_PATH = "data/covers/"

image_files = list(os.listdir(IMAGES_PATH))

full_file_paths = [IMAGES_PATH+image for image in image_files]

print("Number of image files: {}".format(len(image_files)))
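Note that os.listdir returns every entry in the folder, including hidden files or anything else that is not an image. Here is a minimal sketch of one way to keep only image files, assuming the covers use common extensions such as .jpg or .png. The helper name `list_image_files` and the extension set are my own illustrative choices, not part of the original project.

```python
import tempfile
from pathlib import Path

# Assumption: these extensions cover the image files we care about.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def list_image_files(folder, extensions=IMAGE_EXTENSIONS):
    """Return the sorted names of files in `folder` with an image extension."""
    return sorted(
        path.name
        for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() in extensions
    )


# Small self-contained demonstration using a temporary folder.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["13_dance-of-thieves-book-1.jpg", "notes.txt", ".DS_Store"]:
        (Path(tmp) / name).touch()
    demo_files = list_image_files(tmp)

print(demo_files)
```

In the post's setting, the call would look something like `list_image_files("data/covers/")`.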

What does our target look like?

To answer that question, we can create a data frame of the book titles and the target ages in our sample, and then plot the target.

Since I scraped the data, I know the beginning of the file name is the target age (e.g., 13 is the minimum age for the file '13_dance-of-thieves-book-1.jpg'), so we can create a data frame of the:

- file names

- full paths

- and a target column called age, created by splitting the file name on the underscore and extracting the first element

data = {'files':image_files, 'full_path':full_file_paths}

df = pd.DataFrame(data=data)

df['age'] = df['files'].str.split("_").str[0].astype('int')

df.head()
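The astype('int') call above will raise an error if any file name does not start with a number. As a hedged alternative, here is a small sketch that extracts the leading digits with a regular expression and flags any file it cannot parse. The second and third file names are made up for illustration.

```python
import pandas as pd

# Only the first name comes from the post; the others are hypothetical.
files = pd.Series([
    "13_dance-of-thieves-book-1.jpg",
    "4_hypothetical-picture-book.jpg",
    ".DS_Store",
])

# Capture the digits before the first underscore; non-matching names become NaN.
age = pd.to_numeric(files.str.extract(r"^(\d+)_", expand=False), errors="coerce")

# Names we could not parse, so they can be inspected or dropped.
unparsed = files[age.isna()].tolist()
parsed_ages = age.dropna().astype(int).tolist()

print(parsed_ages)
print(unparsed)
```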

Now we can plot the age feature.

df['age'].plot(kind= "hist",

bins=range(2,18),

figsize=(24,10),

xticks=range(2,18),

fontsize=16);

Thankfully, the plot above has a nearly identical distribution to the entire sample (see this post) so all is good and we can continue.

What do our covers look like?

We know the general shape of our target, but let's get a feel for what the inputs (i.e., the book covers) look like by using the IPyPlot package.

To do so, we convert the image paths and the target to NumPy arrays:

images = df['full_path'].to_numpy()

labels_int = df['age'].to_numpy()

and then pass them as arguments to the plot_class_representations function which will return the first instance of each of our targets.

In other words, the function will display the first book which is rated for 2 year olds, 3 year olds, 4 year olds, etc., until all levels of the target are represented.

ipyplot.plot_class_representations(images=images, labels=labels_int, force_b64=True)

There seems to be a correlation between the dimensions of the books and the target age; books for younger readers tend to be square whereas books for older readers are usually rectangular.

Let's investigate that further by plotting multiple covers per age which we can do by using the plot_class_tabs function.

ipyplot.plot_class_tabs(images=images, labels=labels_int, max_imgs_per_tab=4, force_b64=True)

Hmmmmm. Could be true but we'll need more evidence to be certain.

What are the sizes and channels of our covers?

The size of a cover is the height and width of its image in pixels, while the number of channels tells us whether the cover is in color.

"Why do we care?"

It is crucial to understand the size of our covers because when we create our convolutional neural network (CNN), we'll need to set the input shape, which is the height, width, and number of channels of our images [1]. Furthermore, when we create our model, we'll either need to normalize the size of our images [2], meaning every cover has the same dimensions, or create multiple inputs.
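To make "every cover has the same dimensions" concrete, here is a minimal sketch that pads shorter images with black rows so they can be stacked into one array. It uses synthetic NumPy arrays in place of real covers, and padding is only one option (resizing is another); it is not necessarily what the final model in this series uses. The helper name `pad_to_height` is hypothetical.

```python
import numpy as np


def pad_to_height(image, target_height):
    """Pad an H x W x C image with black rows at the bottom up to target_height."""
    missing = target_height - image.shape[0]
    if missing < 0:
        raise ValueError("image is taller than target_height")
    # Pad only the first (height) axis; leave width and channels untouched.
    return np.pad(image, ((0, missing), (0, 0), (0, 0)),
                  mode="constant", constant_values=0)


# Synthetic stand-ins for two covers of different heights.
toy_covers = [
    np.ones((170, 170, 3), dtype=np.uint8),
    np.ones((255, 170, 3), dtype=np.uint8),
]

target = max(cover.shape[0] for cover in toy_covers)
batch = np.stack([pad_to_height(cover, target) for cover in toy_covers])

print(batch.shape)
```

Once every image shares one shape, `batch.shape[1:]` is the kind of (height, width, channels) tuple a CNN input layer expects.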

"So where do we start?"

First create a list of arrays of the covers:

covers = [cv2.imread(IMAGES_PATH+image) for image in image_files]

Congratulations! All of our covers are now stored as a list of arrays of pixel data so we can use shape to inspect the dimensions of our covers.

For example, the first cover in our collection is Dance of Thieves which looks like this:

import matplotlib.pyplot as plt

sample = df.iloc[0,1]

sample_img = cv2.imread(sample)

plt.imshow(cv2.cvtColor(sample_img, cv2.COLOR_BGR2RGB)) # OpenCV loads images as BGR, but matplotlib expects RGB

plt.xticks([]), plt.yticks([]) # to hide tick values on X and Y axis

plt.show()

When we call shape on it, we get a tuple like in the output below:

covers[0].shape

"What does this tuple contain?"

- the height and width (i.e., rows and columns) measured in pixels

- the number of channels (i.e., 3 for a color image; note that OpenCV stores them in blue, green, red order rather than RGB)

If an image is loaded in grayscale (for example, with cv2.IMREAD_GRAYSCALE), the tuple contains just the height and width.

Therefore, from the output above, we know Dance of Thieves is 255 pixels high by 170 pixels wide, and is in color.

"But what about the rest of the covers?"

Glad you asked.

Get the Dimensions of All Covers

Since we know the order of the elements of the tuple, (i.e., height, width, channel), we can use indexing and list comprehension to get the dimensions of our covers like this:

height = [cover.shape[0] for cover in covers]

width = [cover.shape[1] for cover in covers]

channels = [cover.shape[2] for cover in covers]

If I were to use a for loop, which I don't recommend because it tends to be slower than a list comprehension, I would do so like this:

width = []

height = []

channels = []

for cover in covers:

    img = cover.shape

    height.append(img[0])

    width.append(img[1])

    channels.append(img[2])

Either way, we then add the results to our data frame:

df['width'] = width

df['height'] = height

df['channels'] = channels

df.head()
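One caveat about the channel lookup above: indexing shape[2] raises an IndexError for any array that has only two dimensions, which is what a grayscale-loaded image looks like. Here is a small defensive sketch that treats a 2-D array as a single channel. The helper name `get_dimensions` is my own, and synthetic arrays stand in for real covers.

```python
import numpy as np


def get_dimensions(image):
    """Return (height, width, channels), treating a 2-D array as one channel."""
    height, width = image.shape[:2]
    channels = image.shape[2] if image.ndim == 3 else 1
    return height, width, channels


# Synthetic stand-ins: one color image and one grayscale image.
color = np.zeros((255, 170, 3), dtype=np.uint8)
gray = np.zeros((255, 170), dtype=np.uint8)

dims = [get_dimensions(img) for img in (color, gray)]
print(dims)
```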

Excellent! Now we can start answering some questions like:

Are all the covers in color?

df.channels.value_counts()

Yes, yes they are.
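A caveat worth keeping in mind: cv2.imread with its default flag always returns a three-channel array, even when the file itself is grayscale, so the channel count alone cannot rule out grayscale covers. Here is a rough sketch of a stricter check, which compares the channels to each other. The function name is hypothetical and the arrays are synthetic.

```python
import numpy as np


def is_effectively_grayscale(image):
    """True for a 2-D image, or a 3-channel image whose channels are identical."""
    if image.ndim == 2:
        return True
    same_01 = np.array_equal(image[..., 0], image[..., 1])
    same_12 = np.array_equal(image[..., 1], image[..., 2])
    return same_01 and same_12


# A gray image stored with three identical channels.
plane = np.arange(12, dtype=np.uint8).reshape(3, 4)
gray_as_color = np.stack([plane, plane, plane], axis=-1)

# The same image with a single pixel tinted in one channel.
tinted = gray_as_color.copy()
tinted[0, 0, 2] = 255

results = [is_effectively_grayscale(gray_as_color), is_effectively_grayscale(tinted)]
print(results)
```

Applied to the list of loaded covers, something like `sum(is_effectively_grayscale(c) for c in covers)` would count the covers that carry no color information.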

Are all the covers the same width?

set(df['width'])

Yes, yes they are.

But...

Are all the covers the same height?

set(df['height'])

No, no they are not.

Since all the book covers are 170 pixels wide, books which are 170 pixels in height are a square and, based on domain knowledge, a great number of books for very young children are square. To that end, I'm wondering...

What's the average age based on the height of the book?

df[['age', 'height']].groupby('height').describe().round()

Interesting, but what does this look like when we plot it?

df[['age', 'height']].hist(by='height', sharex=True, sharey=True, bins=range(2,18), figsize=(10,5));

Based on the plot above, it would certainly appear there is a correlation between the height of a book and the target age.

We can quantify that relationship like this:

df[['age', 'height']].corr()
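By default, corr() computes the Pearson correlation coefficient, i.e. the covariance of the two columns divided by the product of their standard deviations. As a sanity check, the sketch below computes it by hand on a tiny made-up data frame and compares it with pandas. The numbers are purely illustrative and are not from the book cover data.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data, NOT the real covers.
toy = pd.DataFrame({
    "age": [2, 3, 4, 8, 12, 14],
    "height": [170, 170, 170, 255, 255, 255],
})


def pearson_r(x, y):
    """Pearson correlation: sum of co-deviations over the root of squared deviations."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_centered = x - x.mean()
    y_centered = y - y.mean()
    denominator = np.sqrt((x_centered ** 2).sum() * (y_centered ** 2).sum())
    return float((x_centered * y_centered).sum() / denominator)


manual = pearson_r(toy["age"], toy["height"])
from_pandas = float(toy[["age", "height"]].corr().loc["age", "height"])

print(round(manual, 6) == round(from_pandas, 6))
```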

Now, while people in the hard sciences would probably scoff at a correlation of .5, I, coming from language education, see a .5 and get excited. Therefore, when we make our model, we'll want to add the height of our book as a feature.
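As a hedged illustration of what such a feature could look like, the sketch below derives an aspect ratio and a square/not-square flag from the width and height columns. The column names `aspect_ratio` and `is_square` are my own suggestions, and the two rows are made up rather than taken from the real data frame.

```python
import pandas as pd

# Hypothetical rows standing in for the real data frame.
toy = pd.DataFrame({
    "age": [3, 13],
    "width": [170, 170],
    "height": [170, 255],
})

# Height relative to width: 1.0 means a square cover.
toy["aspect_ratio"] = toy["height"] / toy["width"]

# Boolean flag for square covers.
toy["is_square"] = toy["height"] == toy["width"]

print(toy)
```

Since every cover in this sample has the same width, the aspect ratio carries the same information as the height here. It would only add something if the widths varied.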

Summary

- numeric data

- categorical data

- images

That's a wrap for EDA!

While I've explored several techniques and multiple libraries in this series, the whole project can be summarized as "be sure to get to know your data."

Happy coding!