E is for Exploratory Data Analysis: Numeric Data

What is Exploratory Data Analysis (EDA), why is it done, and how do we do it in Python?

What is Exploratory Data Analysis (EDA)?

EDA is the process of getting to know our data, primarily through simple visualizations, before fitting a model. As Wickham and Grolemund note, EDA is more an attitude than a scripted list of steps that must be carried out.[1]

Why is it done?

Two main reasons:

- If we collected the data ourselves to solve a problem, we need to determine whether our data is sufficient for solving that problem.

- If we didn't collect the data ourselves, we need a basic understanding of the type, quantity, and quality of our data, as well as the possible relationships between its features.

How do we do it in Python?

While I could use a toy dataset, as in my last post, after seeing tweets like this:

1. Isn’t iris the iris of ML

— David Robinson (@drob) July 23, 2018

2. Isn’t gapminder the iris of EDA

and listening to Hugo Bowne-Anderson on DataFramed bemoan the overuse of the Iris and Titanic datasets, I'm feeling inspired to use my own data.

As always, I'll follow the steps outlined in Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow.

Step 1: Frame the Problem

"Given a set of features, can we determine how old someone needs to be to read a book?"

Step 2: Get the Data

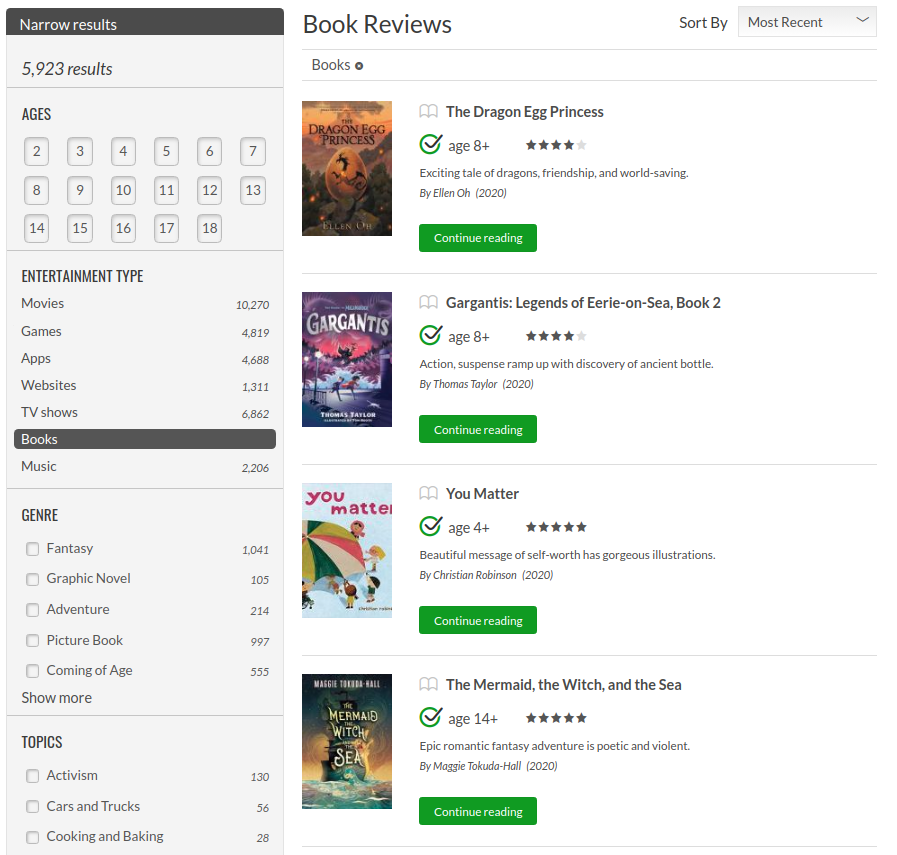

To answer the question above, I sourced labeled data by scraping Common Sense Media's book reviews with BeautifulSoup and then wrote the data to a CSV file.[2]

Now that we have our data, let's move on to...

Step 3: Explore the Data to Gain Insights (i.e. EDA)

As always, import the essential libraries, then load the data.

```python
# For data manipulation
import pandas as pd
import numpy as np

# For visualization
import seaborn as sns
import matplotlib.pyplot as plt
import missingno as msno

url = 'https://raw.githubusercontent.com/educatorsRlearners/book-maturity/master/csv/book_info_complete.csv'
df = pd.read_csv(url)
```

Time to start asking and answering some basic questions:

- How much data do we have?

```python
df.shape
```

OK, so we have 23 features and one target as well as 5,816 observations.

Why do we have fewer observations than in the screenshot above?

Because Common Sense Media is constantly adding new reviews to its website: it has added nearly 100 books since I completed my project at the end of March 2020.

- What type of data do we have?

```python
df.info()
```

Looks like a mix of strings and floats.

Let's take a closer look.

```python
df.head().T
```

The picture is coming into focus. Since I collected the data, I know that the target is csm_rating, which is the minimum age Common Sense Media (CSM) says a reader should be for a given book.

Also, we have essentially three types of features:

- Numeric

  - par_rating: Ratings of the book by parents

  - kids_rating: Ratings of the book by children

  - csm_rating: Ratings of the book by Common Sense Media

  - Number of pages: Length of the book

  - Publisher's recommended age(s): Self-explanatory

- Date

  - Publication date: When the book was published

  - Last updated: When the book's information was last updated

- Text: all other features

To make inspection a little easier, let's clean up those column names.[3]

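```python
df.columns
```

```python
# Strip whitespace, lowercase, replace spaces with underscores, and drop parentheses
# (regex=False makes '(' and ')' literal characters rather than regex syntax)
df.columns = (df.columns.str.strip()
                        .str.lower()
                        .str.replace(' ', '_', regex=False)
                        .str.replace('(', '', regex=False)
                        .str.replace(')', '', regex=False))
```

```python
df.columns
```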

Much better.
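To see exactly what that chain of string methods does, here is a small sketch on a handful of hypothetical raw headers written in the style of the feature names listed above (they are illustrative, not copied from the CSV). The helper name clean_column_names is also hypothetical; it just wraps the same steps.

```python
import pandas as pd

def clean_column_names(columns: pd.Index) -> pd.Index:
    """Strip whitespace, lowercase, use underscores for spaces, and drop parentheses."""
    return (columns.str.strip()
                   .str.lower()
                   .str.replace(' ', '_', regex=False)
                   .str.replace('(', '', regex=False)
                   .str.replace(')', '', regex=False))

# Hypothetical raw headers (illustrative only)
raw = pd.Index([' Number of pages ', "Publisher's recommended age(s)", 'Last updated'])
cleaned = clean_column_names(raw)
print(list(cleaned))
# ['number_of_pages', "publisher's_recommended_ages", 'last_updated']
```

Note that the apostrophe survives, which is why the later code has to refer to "publisher's_recommended_ages" with double quotes.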

Given the number and variety of features, I'll focus on the numeric features in this post and analyze the text features in Part II.

Therefore, let's subset the data frame to work with only the features of interest.

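```python
numeric = ['par_rating', 'kids_rating', 'csm_rating', 'number_of_pages', "publisher's_recommended_ages"]

# .copy() makes df_numeric an independent frame, so adding columns to it later
# doesn't trigger a SettingWithCopyWarning
df_numeric = df[numeric].copy()

df_numeric.head()
```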


Two problems stand out. First, publisher's_recommended_ages is a range instead of a single value.

Second, it's the wrong data type (i.e., non-numeric):

```python
df_numeric.dtypes
```

We can fix both of those issues.

Given that we only care about the minimum age, we can:

- split the string on the hyphen

- keep only the first value, since that will be the minimum age recommended by the publisher

- convert the column to numeric

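```python
# Create a column with the minimum age: split on the first hyphen and keep the left-hand value
df_numeric['pub_rating'] = (df_numeric["publisher's_recommended_ages"]
                            .str.split("-", n=1, expand=True)[0]
                            .str.strip())

# Set the column as numeric. Plain column assignment replaces the column outright;
# in recent pandas versions, .loc[:, 'pub_rating'] = ... may write into the existing
# object column and leave its dtype unchanged
df_numeric['pub_rating'] = pd.to_numeric(df_numeric['pub_rating'])

df_numeric.head().T
```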

Now we can drop the unnecessary column.

```python
df_numeric = df_numeric.drop(columns="publisher's_recommended_ages")

df_numeric.head().T
```

```python
df_numeric.loc[:, 'csm_rating'].describe()
```

Good news! We do not have any missing values for our target: its count matches the 5,816 observations in the dataset. Also, the lowest recommended age for a book is 2 years old, which has to be a picture book, while the highest is 17.

All useful info, but what does our target look like?

```python
df_numeric.loc[:, 'csm_rating'].plot(kind="hist",
                                     bins=range(2, 18),
                                     figsize=(24, 10),
                                     xticks=range(2, 18),
                                     fontsize=16);
```

Hmmm. Two thoughts:

First, the distribution is multimodal, so when we split the data into training, validation, and test sets, we'll need to use stratified random sampling so that each age appears in roughly the same proportion in every split. Also, the book recommendations seem to fall into one of three categories: really young readers (e.g., 5 years old), tweens, and teens or older.
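As a rough illustration of stratified sampling, here is a minimal pandas-only sketch, assuming the target is a discrete column like csm_rating. The data is synthetic (three clusters of ages standing in for the multimodal shape above), and the helper stratified_split is hypothetical. It samples the same fraction of rows within each target value, so the split's class proportions track the whole dataset.

```python
import numpy as np
import pandas as pd

def stratified_split(df: pd.DataFrame, target: str, test_frac: float = 0.2, seed: int = 42):
    """Split df so each value of `target` keeps roughly the same share in both parts."""
    # Sample test_frac of the rows within each target value (each value is one stratum)
    test = df.groupby(target).sample(frac=test_frac, random_state=seed)
    # Every row not sampled into the test set goes to the training set
    train = df.drop(index=test.index)
    return train, test

# Synthetic, multimodal stand-in for csm_rating (not the real data)
rng = np.random.default_rng(0)
ages = np.concatenate([rng.choice([4, 5, 6], 300),
                       rng.choice([10, 11, 12], 500),
                       rng.choice([14, 15], 200)])
toy = pd.DataFrame({'csm_rating': ages})

train, test = stratified_split(toy, 'csm_rating')
print(train['csm_rating'].value_counts(normalize=True).sort_index().round(3))
print(test['csm_rating'].value_counts(normalize=True).sort_index().round(3))
```

To get a validation set as well, the same function can be applied a second time to train. scikit-learn's train_test_split offers the same behavior through its stratify argument.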


Second, given this distribution, we could simplify our task by predicting an age group instead of an exact age. Something to keep in mind for future research.
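If we did switch to predicting age groups, pd.cut is one way to bin an exact age into labeled ranges. The cut points and labels below are purely hypothetical: the post names the three groups but not their boundaries, so treat them as placeholders to revisit against the histogram.

```python
import pandas as pd

# Hypothetical cut points and labels; intervals are right-closed: (0, 7], (7, 12], (12, 18]
bins = [0, 7, 12, 18]
labels = ['young', 'tween', 'teen+']

csm_rating = pd.Series([2, 5, 7, 8, 10, 12, 13, 15, 17], name='csm_rating')
age_group = pd.cut(csm_rating, bins=bins, labels=labels)
print(pd.DataFrame({'csm_rating': csm_rating, 'age_group': age_group}))
```

Because the intervals are right-closed by default, a boundary age such as 12 falls into the lower group ('tween'); pass right=False to flip that.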

Moving on.

```python
msno.bar(df_numeric);
```

Good news!

- There are fewer than 50 missing values for number_of_pages.

Bad news!

- pub_rating is missing about a thousand values.

- Nearly half of the kids_rating values are missing.

- More than half of the par_rating values are missing.
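The bar chart is easy to read at a glance, but the same information is also easy to get as a table with exact counts and percentages. Here is a minimal sketch; missing_report is a hypothetical helper, and the tiny frame reuses the df_numeric column names with made-up values.

```python
import numpy as np
import pandas as pd

def missing_report(df: pd.DataFrame) -> pd.DataFrame:
    """Count and percentage of missing values per column, most-missing first."""
    counts = df.isna().sum()
    report = pd.DataFrame({'n_missing': counts,
                           'pct_missing': (counts / len(df) * 100).round(1)})
    return report.sort_values('n_missing', ascending=False)

# Tiny synthetic frame with the same column names as df_numeric (values are made up)
toy = pd.DataFrame({
    'par_rating':      [8, np.nan, np.nan, 10],
    'kids_rating':     [9, 11, np.nan, np.nan],
    'csm_rating':      [8, 10, 12, 9],
    'number_of_pages': [200, 320, np.nan, 150],
    'pub_rating':      [8, np.nan, 12, 9],
})
print(missing_report(toy))
```

On the real data, missing_report(df_numeric) would give the exact numbers behind the good-news/bad-news summary.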

When we get to the cleaning/feature engineering stage, we'll have to decide whether it's better to drop or impute the missing values. However, before we do that, let's visualize the data to get a better feel for it.

```python
df_numeric['kids_rating'].plot(kind="hist",
                               bins=range(2, 18),
                               figsize=(24, 10),
                               xticks=range(2, 18),
                               fontsize=16);
```

Hmmm. Looks like the children who wrote the bulk of the reviews think the books they reviewed were suitable for children between the ages of 8 and 14.

What about the parents' ratings?

```python
df_numeric['par_rating'].plot(kind="hist",
                              bins=range(2, 18),
                              figsize=(24, 10),
                              xticks=range(2, 18),
                              fontsize=16);
```

Same shape as the kids' ratings, but a little less pronounced?

Finally, let's find out what the publishers think.

```python
df_numeric['pub_rating'].plot(kind="hist",
                              bins=range(2, 18),
                              figsize=(24, 10),
                              xticks=range(2, 18),
                              fontsize=16);
```

This looks promising. Let's compare it to our target csm_rating.

```python
df_numeric.loc[:, ['pub_rating', 'csm_rating']].hist(bins=range(2, 18),
                                                      figsize=(20, 10));
```


While not identical by any means, both distributions have the same multimodal shape.
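Matching histogram shapes don't tell us whether the publisher and CSM agree on the same book. One quick way to check is to look at the per-book difference, using only books that have both ratings. Here is a minimal sketch on made-up values; rating_gap is a hypothetical helper.

```python
import numpy as np
import pandas as pd

def rating_gap(df: pd.DataFrame, a: str = 'pub_rating', b: str = 'csm_rating') -> pd.Series:
    """Per-book difference a - b, using only rows where both ratings are present."""
    both = df[[a, b]].dropna()
    return both[a] - both[b]

# Made-up ratings for five books (one has no publisher rating)
toy = pd.DataFrame({'pub_rating': [8, 10, np.nan, 12, 3],
                    'csm_rating': [8, 11, 9, 12, 5]})
gap = rating_gap(toy)
print(gap.value_counts().sort_index())
print(f"Exact agreement: {(gap == 0).mean():.0%}")
```

A gap distribution centered near zero would support the idea that pub_rating tracks the target book by book, not just in aggregate.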

Let's see how well our features correlate.

```python
# Create the correlation matrix (pairwise Pearson correlations; missing values are excluded pair by pair)
corr = df_numeric.corr()

# Generate a boolean mask that covers the upper triangle of the matrix (diagonal included)
mask = np.triu(np.ones_like(corr, dtype=bool))

# Plot the heatmap with the correlation coefficients annotated
with sns.axes_style("white"):
    f, ax = plt.subplots(figsize=(8, 6))
    ax = sns.heatmap(corr, mask=mask, annot=True, square=True)
```


The pub_rating is nearly a proxy for the target.


The parents and kids ratings correlate more strongly with the target than they do with each other.
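One caveat: DataFrame.corr excludes missing values pair by pair, so with so many missing par_rating and kids_rating values, each coefficient in the heatmap may rest on a different number of books. The sketch below (made-up values; pairwise_counts is a hypothetical helper) counts the rows behind each pair and shows the min_periods argument, which returns NaN for pairs with too few complete rows.

```python
import numpy as np
import pandas as pd

def pairwise_counts(df: pd.DataFrame) -> pd.DataFrame:
    """Number of rows where both columns are non-missing, for every pair of columns."""
    present = df.notna().astype(int)
    # (columns x rows) @ (rows x columns) -> (columns x columns) co-occurrence counts
    return present.T @ present

# Made-up ratings with missing values
toy = pd.DataFrame({'par_rating':  [8, np.nan, 10, np.nan, 12],
                    'kids_rating': [9, 11, np.nan, np.nan, 13],
                    'csm_rating':  [8, 10, 11, 9, 12]})
print(pairwise_counts(toy))
# Pairs with fewer than 3 complete rows come back as NaN instead of a fragile estimate
print(toy.corr(min_periods=3))
```

On the real data, pairwise_counts(df_numeric) shows how many books actually have both a parent and a kid rating.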

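Since both ratings correlate with the target, dropping them, or dropping the rows where they are missing, would throw away useful signal. Looks like we're going to have to impute those missing values after all.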
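There are many imputation strategies, and the post doesn't commit to one yet. As a placeholder, here is a minimal sketch of one common option (not necessarily the approach used later): median imputation plus a 0/1 indicator column recording which values were filled, so a model can still learn from the fact that a rating was missing. The helper name impute_with_indicator is hypothetical, and the values are made up.

```python
import numpy as np
import pandas as pd

def impute_with_indicator(df: pd.DataFrame, columns: list) -> pd.DataFrame:
    """Median-impute `columns`, adding a 0/1 flag column recording which values were filled."""
    out = df.copy()  # leave the caller's frame untouched
    for col in columns:
        out[f'{col}_was_missing'] = out[col].isna().astype(int)
        out[col] = out[col].fillna(out[col].median())
    return out

toy = pd.DataFrame({'par_rating':  [8, np.nan, 10, 12],
                    'kids_rating': [9, 11, np.nan, 13]})
print(impute_with_indicator(toy, ['par_rating', 'kids_rating']))
```

In a real pipeline, the medians should be computed on the training split only and then applied to the validation and test splits, to avoid leaking information from held-out data.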

What about potential outliers?

A final step in EDA is to search for potential outliers: values that differ significantly from the rest of the data.

Our dataset could contain outliers for a couple of reasons:

- the data was mis-keyed (e.g., someone typed "100" instead of "10")

- the observation genuinely is significantly different from the norm[4]

The good news is that I chose to scrape Common Sense Media's book reviews because, based on my knowledge of the domain, I trust their ratings, and given the organization's professionalism, we can be fairly confident that the data is accurate.

However, the old maxim of "Trust, but verify" exists for a reason.

This post is already much longer than I had planned, so I won't go through all the numeric features or the many ways of identifying outliers. One really simple approach, though, is to plot the relationship between two features, so let's investigate the relationship between rating and book length.

```python
df_numeric.plot.scatter(x='number_of_pages',
                        y='csm_rating',
                        figsize=(24, 10),
                        fontsize=16);
```

We have spotted our first probable outliers: surely a ~400-page book can't be meant for a two- or three-year-old, right?

```python
df.query('csm_rating < 6 & number_of_pages > 300')[['title', 'description']]
```

Well, so much for that idea.

To quote the AI Guru, instead of simply relying on printouts and plots, you "should always look at your bleeping data."
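For one of the rule-based ways of flagging outliers skipped above, here is a minimal sketch of the common interquartile-range (IQR) rule: anything more than 1.5 × IQR below the first quartile or above the third is flagged. The page counts are made up, and iqr_outliers is a hypothetical helper. As the query above shows, a flagged value is only a candidate to inspect, not proof of an error.

```python
import pandas as pd

def iqr_outliers(s: pd.Series, k: float = 1.5) -> pd.Series:
    """Boolean mask: True where a value lies more than k * IQR outside the quartiles."""
    q1, q3 = s.quantile([0.25, 0.75])
    iqr = q3 - q1
    # NaN compares False on both sides, so missing values are never flagged
    return (s < q1 - k * iqr) | (s > q3 + k * iqr)

# Made-up page counts
pages = pd.Series([32, 48, 150, 220, 256, 300, 320, 352, 400, 1200], name='number_of_pages')
print(pages[iqr_outliers(pages)])
```

Applied to the real data, df_numeric[iqr_outliers(df_numeric['number_of_pages'])] would list the candidate books to look at.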

Summary

This post turned out to be part one of what will likely be at least three posts on EDA for:

- numeric data

- categorical data

- images (book covers)

Going forward, my key points to remember are:

Does the shape of the data make sense?

Based on my problem statement, I do not need normally distributed data. However, based on the question I'm trying to solve, I might expect the data to fit a certain distribution.

Similarly, are the values what I expect?

What if the only ratings I had were for 4-year-olds? That would have been a clear sign that I had made a mistake somewhere along the line and needed to go back and fix it.

Also, I have to ask if the data makes sense or if I have outliers.

What's missing?

There will always be missing values. How many there are, and in which features, will drive a lot of feature-engineering decisions.

Speaking of which...

Are all the features I want present?

The numeric features I have are pretty complete, but what would happen if I combined the par_rating with the kids_rating to create a new feature? Would the two features combined be more valuable than either one on its own? Only one way to find out.
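As a starting point for that experiment, here is a minimal sketch of one hypothetical way to combine the two: average whichever of the two ratings is present for each book. The column name combined_rating and the averaging choice are assumptions for illustration, and the values are made up.

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({'par_rating':  [8, np.nan, 10, np.nan],
                    'kids_rating': [10, 12, np.nan, np.nan]})

# Hypothetical combined feature: the mean of whichever ratings are present.
# DataFrame.mean skips NaN by default, so a book with only one rating keeps that rating,
# and only books with neither rating stay missing.
toy['combined_rating'] = toy[['par_rating', 'kids_rating']].mean(axis=1)
print(toy)
```

A side effect is that the combined feature has fewer missing values than either input; whether it is actually more useful can be checked by comparing its correlation with csm_rating against those of the two originals.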

Happy coding!

Footnotes

1. Chapter 7, "Exploratory Data Analysis," in R for Data Science by Hadley Wickham & Garrett Grolemund

3. A big thank-you to Chaim Gluck for providing this tip